Playing Android with Firebase ML kit, Tensorflow Lite and ARCore 4

ARCore with TFLite Segmentation in augmented reality

Finally, I am ready to play with ARCore. ARCore is a powerful library for implementing augmented reality on various platforms: Android, iOS, Unreal and Unity. It provides APIs for understanding the real world through the camera, including motion tracking, light estimation and object placement.

Third Chapter: Playing Android with Firebase ML kit, Tensorflow Lite and ARCore 3

You can find plenty of cool examples and tutorials in the official GitHub repository. To use ARCore, you need an ARCore-supported device and the ARCore SDK. Refer to the official quickstart to get started.

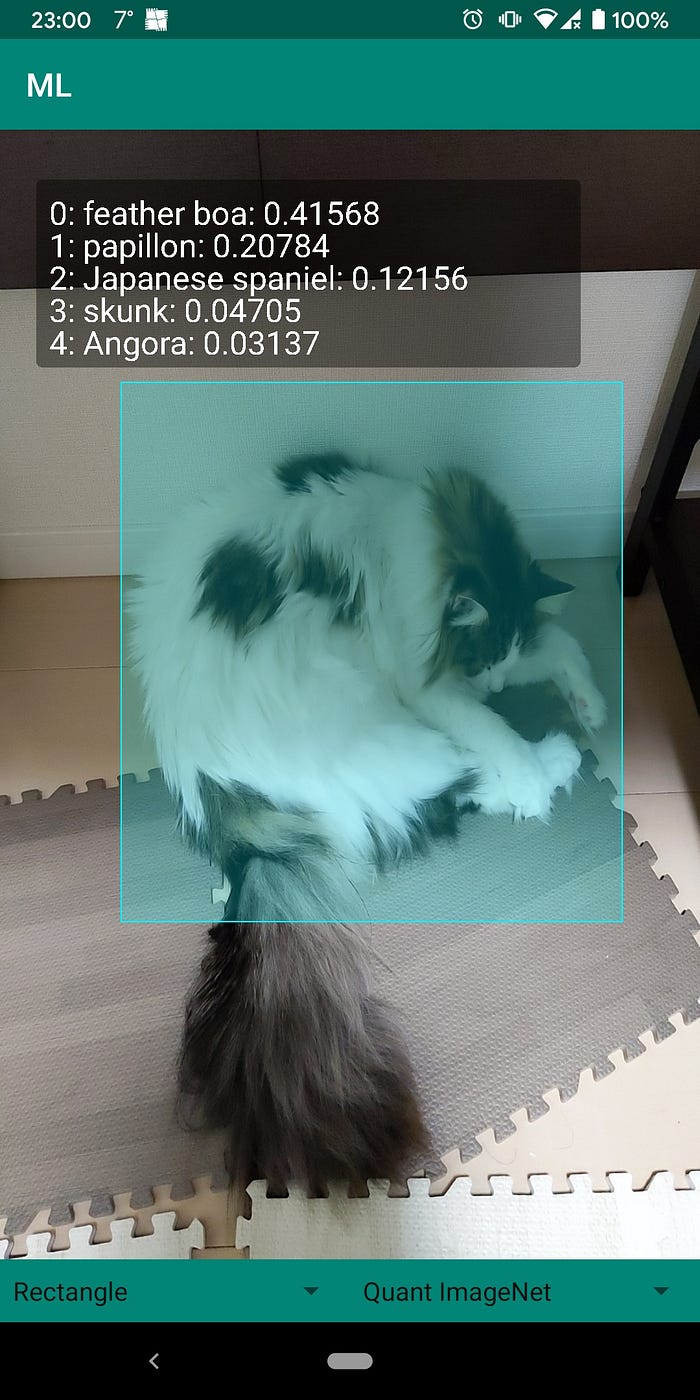

Duplicating an object in the camera interface

There are many examples, but I would like to combine ARCore with segmentation to duplicate an object seen by the camera and place the copy in the AR world, as shown below.

To start developing the application, you need to add the ARCore and Sceneform dependencies.

build.gradle (app module)

```groovy
dependencies {
    implementation 'com.google.ar.sceneform:core:1.14.0'
    implementation "com.google.ar.sceneform.ux:sceneform-ux:1.14.0"
}

apply plugin: 'com.google.ar.sceneform.plugin'
```

AndroidManifest.xml

```xml
<!-- Inside <application>: marks ARCore as required for this app -->
<meta-data
    android:name="com.google.ar.core"
    android:value="required" />
```

Sceneform, which runs on top of ARCore, provides the view that connects the real world with augmented objects. As the picture shows, you can place 2D and 3D virtual objects in the three-dimensional world.

I use Sceneform as the interface for this application as well, together with a custom DrawView to mark the objects to capture in the world.

activity_sceneform.xml

```xml
<?xml version="1.0" encoding="utf-8"?>
<androidx.constraintlayout.widget.ConstraintLayout xmlns:android="http://schemas.android.com/apk/res/android"
    xmlns:app="http://schemas.android.com/apk/res-auto"
    xmlns:tools="http://schemas.android.com/tools"
    android:layout_width="match_parent"
    android:layout_height="match_parent"
    android:background="@color/colorPrimary"
    tools:context=".SceneformActivity">

    <LinearLayout
        android:layout_width="match_parent"
        android:layout_height="match_parent"
        android:orientation="vertical">

        <RelativeLayout
            android:id="@+id/sceneform_relative_layout"
            android:layout_width="match_parent"
            android:layout_height="0dp"
            android:layout_weight="9"
            app:layout_constraintBottom_toBottomOf="parent"
            app:layout_constraintEnd_toEndOf="parent"
            app:layout_constraintStart_toStartOf="parent"
            app:layout_constraintTop_toTopOf="parent">

            <!-- AR camera view -->
            <fragment
                android:name="com.shibuiwilliam.firebase_tflite_arcore.ar.SceneformArFragment"
                android:id="@+id/sceneform_fragment"
                android:layout_width="match_parent"
                android:layout_height="match_parent"/>

            <!-- Custom view declared last, so it is drawn on top of the AR view -->
            <com.shibuiwilliam.firebase_tflite_arcore.common.DrawView
                android:id="@+id/sceneform_drawview"
                android:layout_width="match_parent"
                android:layout_height="match_parent"/>

        </RelativeLayout>

    </LinearLayout>

</androidx.constraintlayout.widget.ConstraintLayout>
```

In Sceneform, you can place predefined 2D or 3D objects as renderables: a ViewRenderable for 2D Android views, or a ModelRenderable for 3D models.

SceneformActivity.kt

```kotlin
override fun onCreate(savedInstanceState: Bundle?) {
    super.onCreate(savedInstanceState)
    // Stop here if the device cannot run ARCore.
    if (!checkIsSupportedDeviceOrFinish(this)) {
        return
    }
    Utils.logAndToast(this, TAG, "ARcore ready to use", "i")

    setContentView(R.layout.activity_sceneform)
    arFragment = supportFragmentManager
        .findFragmentById(R.id.sceneform_fragment) as SceneformArFragment

    // Place an object where the user taps on a detected plane.
    arFragment.setOnTapArPlaneListener { hitResult: HitResult,
                                         plane: Plane?,
                                         motionEvent: MotionEvent? ->
        placeObject(
            arFragment,
            hitResult.trackable.createAnchor(
                hitResult.hitPose.compose(Pose.makeTranslation(0.0f, 0.0f, 0.0f))
            )
        )
    }
}
```

What I want to do here, however, is to copy an object from the real world and place the copy in the AR world.
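The tap listener composes the hit pose with `Pose.makeTranslation(0.0f, 0.0f, 0.0f)`, which leaves the pose unchanged. A non-zero offset is interpreted in the hit pose's local frame: ARCore's `Pose.compose` transforms by the right-hand pose first and then by `this`, so the offset is rotated by the hit pose's orientation before being added to its translation. The plain-Kotlin sketch below reproduces only that translation arithmetic. `Vec3`, `Quat` and `composedTranslation` are hypothetical stand-ins for ARCore's `Pose`, with quaternions in ARCore's (x, y, z, w) order.

```kotlin
// Hypothetical stand-in for the translation part of an ARCore Pose.
data class Vec3(val x: Float, val y: Float, val z: Float) {
    operator fun plus(o: Vec3) = Vec3(x + o.x, y + o.y, z + o.z)
    operator fun times(s: Float) = Vec3(x * s, y * s, z * s)
    fun cross(o: Vec3) = Vec3(y * o.z - z * o.y, z * o.x - x * o.z, x * o.y - y * o.x)
}

// Hypothetical unit quaternion in (x, y, z, w) order, the order ARCore uses.
data class Quat(val x: Float, val y: Float, val z: Float, val w: Float) {
    // Rotates v: v' = v + 2w(u x v) + 2u x (u x v), where u = (x, y, z).
    fun rotate(v: Vec3): Vec3 {
        val u = Vec3(x, y, z)
        val t = u.cross(v) * 2f
        return v + t * w + u.cross(t)
    }
}

// Translation of hitPose.compose(Pose.makeTranslation(offset)):
// the offset is rotated into the hit pose's frame, then added to its position.
fun composedTranslation(hitTranslation: Vec3, hitRotation: Quat, offset: Vec3): Vec3 =
    hitTranslation + hitRotation.rotate(offset)
```

ARCore units are meters, so with an unrotated hit pose an offset of `Vec3(0f, 0.1f, 0f)` would lift the object 10 cm above the hit point; with a rotated pose the same offset follows the surface orientation.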

Placing an object without touching

The ARCore examples place an object when you tap the screen, but a tap is not required to trigger placement. What the tap really does is determine where to anchor the object, so if we can create an anchor at a default position, you don't have to touch the screen at all.

Here, I imitate the tap by calling frame.hitTest() on the ARCore Frame at the center of the view the camera is looking at. At the hit position, I create an anchor and a node holding the actual object, a ViewRenderable, in the AR world.
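`addDrawable` calls `frame.screenCenter()`, which is not part of ARCore's `Frame` class; the article uses it as its own helper. The plain-Kotlin sketch below illustrates the two decisions involved: computing the pixel coordinates of the view center, and choosing a hit. ARCore returns `hitTest` results sorted by distance, nearest first, so the first acceptable result is the nearest one, and returning null when there is none avoids the exception that indexing an empty list throws. `ScreenPoint`, `Hit`, `screenCenter` and `pickHit` are hypothetical; on a device you would read the size from the `ArSceneView` and could filter real `HitResult`s with something like `(hit.trackable as? Plane)?.isPoseInPolygon(hit.hitPose)`.

```kotlin
// Hypothetical pixel coordinate on the AR view.
data class ScreenPoint(val x: Float, val y: Float)

// Hypothetical stand-in for an ARCore HitResult: distance from the camera and
// whether the hit lies inside a detected plane's polygon.
data class Hit(val distance: Float, val insidePlane: Boolean)

// Center of the view in pixels; Frame.hitTest(x, y) takes view pixel coordinates.
fun screenCenter(viewWidthPx: Int, viewHeightPx: Int): ScreenPoint =
    ScreenPoint(viewWidthPx / 2f, viewHeightPx / 2f)

// Hits arrive nearest first, so the first acceptable hit is the nearest acceptable one.
// Returns null instead of throwing when the ray hit nothing usable.
fun pickHit(hitsNearestFirst: List<Hit>): Hit? =
    hitsNearestFirst.firstOrNull { it.insidePlane }
```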

SceneformActivity.kt

```kotlin
private fun addDrawable(viewRenderable: ViewRenderable) {
    // arFrame is null until the AR session has produced its first frame.
    val frame = arFragment.arSceneView.arFrame ?: return
    val center = frame.screenCenter()

    // Imitate a tap at the center of the screen (nearest hit first);
    // bail out instead of crashing when nothing was hit.
    val hitResult = frame.hitTest(center.x, center.y).firstOrNull() ?: return
    Log.i(TAG, "${hitResult.distance}, " +
            "${hitResult.hitPose.xAxis.asList()}, " +
            "${hitResult.hitPose.yAxis.asList()}, " +
            "${hitResult.hitPose.zAxis.asList()}")

    // Anchor the object at the hit pose and attach a movable node to the anchor.
    val modelAnchor = arFragment.arSceneView.session?.createAnchor(hitResult.hitPose) ?: return
    val anchorNode = AnchorNode(modelAnchor)
    anchorNode.setParent(arFragment.arSceneView.scene)

    val transformableNode = TransformableNode(arFragment.transformationSystem)
    transformableNode.setParent(anchorNode)
    transformableNode.renderable = viewRenderable
    transformableNode.worldPosition = Vector3(
        modelAnchor.pose.tx(),
        modelAnchor.pose.ty(),
        modelAnchor.pose.tz()
    )
}
```

Since we can now anchor a position without a tap, all that remains to duplicate a real object is to capture the object itself.

AI with AR

Of course, we can use TFLite together with ARCore. Take classification, for instance: the camera image is shown in the ArFragment's ArSceneView, which is a SurfaceView, so you can copy the view into a Bitmap with PixelCopy and classify that bitmap with TFLite.

SceneformActivity.kt

```kotlin
private fun classifyArFragmentView(arFragment: ArFragment) {
    // ArSceneView is a SurfaceView, so its pixels can be copied with PixelCopy.
    val view = arFragment.arSceneView
    classifySurfaceView(view)
}

private fun classifySurfaceView(view: SurfaceView) {
    val bitmap = Bitmap.createBitmap(view.width,
        view.height,
        Bitmap.Config.ARGB_8888)
    // PixelCopy (API 24+) copies the surface asynchronously and reports on callbackHandler.
    PixelCopy.request(view, bitmap, { copyResult ->
        if (copyResult == PixelCopy.SUCCESS) {
            Log.i(TAG, "Copied ArFragment view.")
            val classified = classifierInterpreter!!
                .classifyAwait(bitmap) ?: return@request
            val results = classifierInterpreter!!
                .extractResults(classified)
            Log.i(TAG, "Classified: $results.")
        }
    }, callbackHandler)
}
```
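`classifyAwait` and `extractResults` belong to the article's own interpreter wrapper, which is not shown. As a hedged sketch of what such a wrapper typically does with the copied `ARGB_8888` bitmap, the plain Kotlin below unpacks packed ARGB pixels (the format `Bitmap.getPixels` fills in) into an interleaved RGB float array, and picks the top-k labels from the output probabilities. The normalization `(value - 127.5) / 127.5` is an assumption that fits float MobileNet-style classifiers; quantized or differently trained models need their own preprocessing. Both function names are hypothetical.

```kotlin
// Converts packed ARGB_8888 pixels into an interleaved RGB float array, dropping alpha.
// Assumption: the model expects each channel normalized as (v - mean) / std.
fun pixelsToFloatInput(pixels: IntArray, mean: Float = 127.5f, std: Float = 127.5f): FloatArray {
    val input = FloatArray(pixels.size * 3)
    for ((i, p) in pixels.withIndex()) {
        val r = (p shr 16) and 0xFF
        val g = (p shr 8) and 0xFF
        val b = p and 0xFF
        input[i * 3] = (r - mean) / std
        input[i * 3 + 1] = (g - mean) / std
        input[i * 3 + 2] = (b - mean) / std
    }
    return input
}

// Returns the k most probable (label, probability) pairs, highest first.
fun topK(labels: List<String>, probabilities: FloatArray, k: Int): List<Pair<String, Float>> {
    require(labels.size == probabilities.size) { "one label per output score" }
    return labels.indices
        .sortedByDescending { probabilities[it] }
        .take(k)
        .map { labels[it] to probabilities[it] }
}
```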

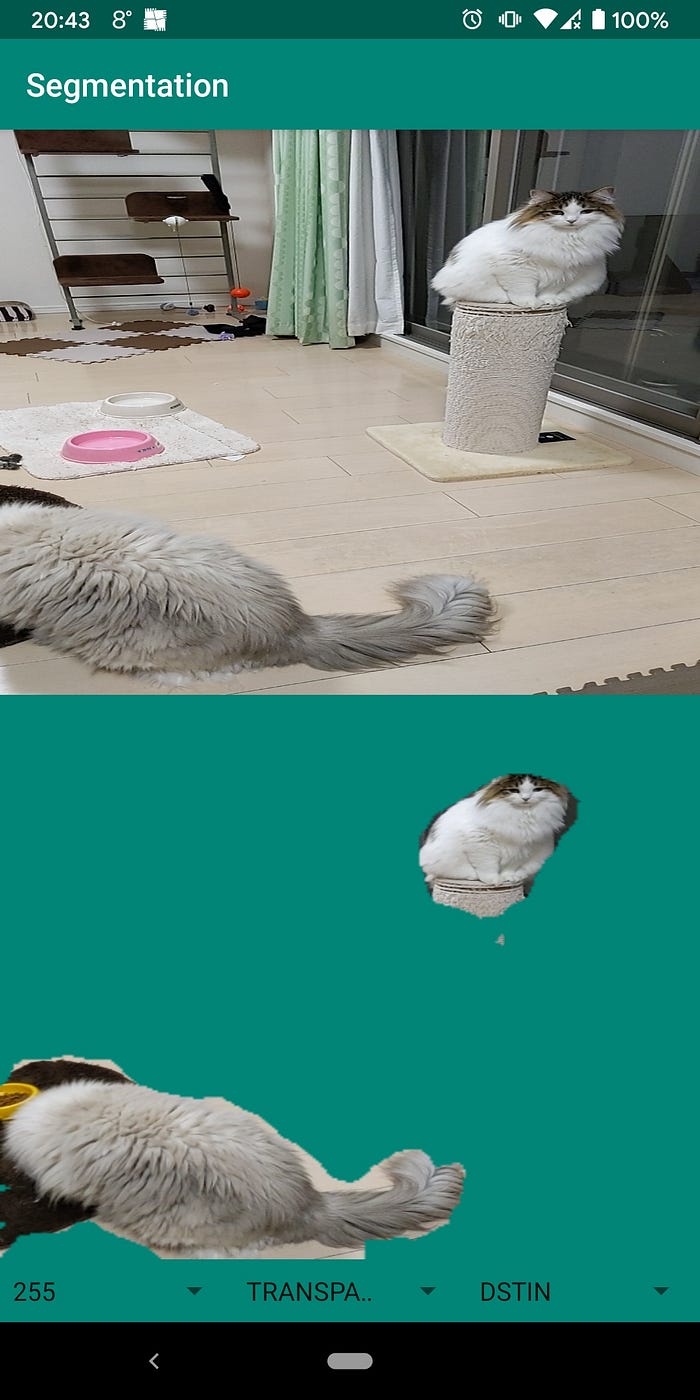

The same applies to segmentation.

To copy an object from the real world, you first have to specify what to copy. This can be partly accomplished by enclosing the target in a rectangle.
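The article's DrawView code is not shown, so the following is a hypothetical sketch of the geometry only: two drag points in view pixels are normalized into a rectangle, clamped to the image bounds, and used to crop a row-major array of packed pixels. Because the classification code creates its bitmap with the `ArSceneView`'s own width and height before calling `PixelCopy`, view coordinates and bitmap coordinates coincide in that setup. On Android, `Bitmap.createBitmap(source, x, y, width, height)` performs the same crop on a real bitmap; `PixelRect`, `dragRect` and `crop` are illustrative names.

```kotlin
// Hypothetical integer rectangle: left/top inclusive, right/bottom exclusive.
data class PixelRect(val left: Int, val top: Int, val right: Int, val bottom: Int) {
    val width: Int get() = right - left
    val height: Int get() = bottom - top
}

// Builds a rectangle from two drag points given in any order and clamps it to the image.
fun dragRect(x0: Int, y0: Int, x1: Int, y1: Int, imageWidth: Int, imageHeight: Int): PixelRect =
    PixelRect(
        left = minOf(x0, x1).coerceIn(0, imageWidth),
        top = minOf(y0, y1).coerceIn(0, imageHeight),
        right = maxOf(x0, x1).coerceIn(0, imageWidth),
        bottom = maxOf(y0, y1).coerceIn(0, imageHeight)
    )

// Copies the pixels inside rect out of a row-major pixel array of the given width.
fun crop(pixels: IntArray, imageWidth: Int, rect: PixelRect): IntArray {
    val out = IntArray(rect.width * rect.height)
    for (row in 0 until rect.height) {
        val src = (rect.top + row) * imageWidth + rect.left
        pixels.copyInto(out, destinationOffset = row * rect.width, startIndex = src, endIndex = src + rect.width)
    }
    return out
}
```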

Once the object is targeted, we can easily crop it out of the view image and, with a bit more effort, remove the noisy background. In the picture above, we only need the cat; neither the floor nor the wall behind it is needed. How can we achieve that? We have segmentation, as I explained in the last post: we can segment the target object and delete the background.
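How the article's `extractSegmentation` and `maskWithSegmentation` work internally is not shown. A common pattern, assumed here, is that a semantic segmentation model outputs one score per class for every pixel; taking the arg-max per pixel gives a label map, and pixels labeled as background are made fully transparent. The plain-Kotlin sketch below assumes a row-major `[pixel][class]` score layout, a mask of the same size as the image, and class 0 as background (true for many segmentation models, but check your model's label list). The function names are hypothetical.

```kotlin
// Arg-max over the class scores of every pixel.
// scores holds numClasses consecutive values per pixel, pixels in row-major order.
fun labelMap(scores: FloatArray, numClasses: Int): IntArray {
    require(scores.size % numClasses == 0) { "scores must hold numClasses values per pixel" }
    return IntArray(scores.size / numClasses) { pixel ->
        val base = pixel * numClasses
        var best = 0
        for (c in 1 until numClasses) {
            if (scores[base + c] > scores[base + best]) best = c
        }
        best
    }
}

// Keeps pixels whose label is not background; background pixels become transparent (0x00000000).
// Assumption: backgroundClass defaults to 0, as in many segmentation models.
fun removeBackground(pixels: IntArray, labels: IntArray, backgroundClass: Int = 0): IntArray {
    require(pixels.size == labels.size) { "mask and image must have the same size" }
    return IntArray(pixels.size) { i -> if (labels[i] == backgroundClass) 0 else pixels[i] }
}
```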

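Creating the segmented image is a series of operations on the image data: scale the input bitmap to the model's input size, run segmentation, extract and post-process the result into a mask bitmap, and mask the original bitmap with it.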

SceneformActivity.kt

```kotlin
private fun createSegmentedBitmap(bitmap: Bitmap?) {
    if (bitmap == null) {
        Log.i(TAG, "segmentation: bitmap is null")
        return
    }
    val imageSegmentation = globals!!.imageSegmentation!!

    // Resize to the square input size the segmentation model expects.
    val scaledInputBitmap = Bitmap.createScaledBitmap(bitmap,
        Constants.IMAGE_SEGMENTATION_DIM_SIZE,
        Constants.IMAGE_SEGMENTATION_DIM_SIZE,
        true)
    // createScaledBitmap may return the source itself; never recycle the caller's bitmap.
    val ownsScaledInput = scaledInputBitmap !== bitmap
    targetBitmap = null

    val segmented = imageSegmentation.segmentAwait(scaledInputBitmap)
    if (segmented == null) {
        Log.i(TAG, "segmentation: segmentation is null")
        if (ownsScaledInput) scaledInputBitmap.recycle()
        return
    }
    // Model output -> segmentation result -> mask bitmap.
    val results = imageSegmentation.extractSegmentation(segmented)
    val segmentationBitmap = imageSegmentation.postProcess(results)

    // Keep only the segmented object from the original bitmap.
    val output = imageSegmentation.maskWithSegmentation(bitmap, segmentationBitmap)
    setSegmentedBitmap(output)

    if (ownsScaledInput) scaledInputBitmap.recycle()
    segmentationBitmap.recycle()
    Log.i(TAG, "segment: ${output.width}, ${output.height}")
}

private fun setSegmentedBitmap(bitmap: Bitmap) {
    segmentedBitmap = bitmap
}
```

Choosing the target, cropping it with the rectangle, removing its background and placing it at the pseudo-anchor creates a duplicate of the object in augmented reality, although only a two-dimensional one.
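One detail is worth noting: the model runs on `scaledInputBitmap` (`IMAGE_SEGMENTATION_DIM_SIZE` pixels square), but `maskWithSegmentation` receives the original full-size `bitmap`, so the small mask has to be stretched back to the original size somewhere. A hedged sketch of that step is nearest-neighbour resampling of the label map, which copies class ids instead of blending them into meaningless in-between values. `resizeLabelsNearest` is hypothetical and not the repository's implementation; on Android, `Bitmap.createScaledBitmap(mask, width, height, false)` (filtering disabled) gives a similar result for a mask stored as a bitmap.

```kotlin
// Nearest-neighbour resize of a row-major label map from (srcW x srcH) to (dstW x dstH).
// Labels are class ids, so they are copied, never interpolated.
fun resizeLabelsNearest(labels: IntArray, srcW: Int, srcH: Int, dstW: Int, dstH: Int): IntArray {
    require(labels.size == srcW * srcH) { "labels must contain srcW * srcH entries" }
    return IntArray(dstW * dstH) { i ->
        val x = i % dstW
        val y = i / dstW
        // Map the destination pixel back onto the source grid.
        val sx = minOf(x * srcW / dstW, srcW - 1)
        val sy = minOf(y * srcH / dstH, srcH - 1)
        labels[sy * srcW + sx]
    }
}
```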

Conclusion

With Firebase ML Kit, TFLite and ARCore, it is getting easier to start playing around with AI and AR on a smartphone. At the same time, these technologies make it possible not only to augment reality but also to be creative in how we interact with the world through camera interfaces.

You can find the whole code of this AI and AR toy in the repository.